DOE for Method Development

Design of experiments (DOE) can be applied to many aspects of method development. The following are the typical steps for designing and analyzing experiments for analytical methods.

• Define the purpose (e.g., repeatability, intermediate precision, accuracy, LOD/LOQ, linearity, resolution).

• Define the range of concentrations the method will be used to measure and the solution matrix it will be measured in.

• Develop/define the reference standards for bias and accuracy studies.

• Define the steps in the method and any associated documentation.

• Determine the responses that are aligned to the purpose of the study.

• Complete a risk assessment of all materials, equipment, analysts, and method components aligned to the purpose of the study and the key responses that will be quantified.

• Design the experimental matrix and sampling plan.

• Identify the error control plan and run the study.

• Analyze the study and determine settings and processing conditions that improve method precision and minimize bias errors. Document the design space of the method and associated limits of key factors.

• Run confirmation tests to verify that the selected settings improve precision, linearity, and bias. Evaluate the impact of the method on product acceptance rates and process capability.
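The link between method precision and process capability in the last step can be sketched in a few lines. This is a minimal sketch that assumes process and method errors are independent and normally distributed, so their variances add; the function names, the 90 to 110 specification, and the SD values are hypothetical.

```python
import math


def observed_sd(process_sd: float, method_sd: float) -> float:
    """SD seen in release data, assuming independent process and method errors."""
    return math.sqrt(process_sd ** 2 + method_sd ** 2)


def cp(lsl: float, usl: float, sd: float) -> float:
    """Potential capability: Cp = (USL - LSL) / (6 * sigma)."""
    return (usl - lsl) / (6.0 * sd)


def normal_cdf(z: float) -> float:
    """Standard normal cumulative distribution function."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def out_of_spec_fraction(lsl: float, usl: float, mean: float, sd: float) -> float:
    """Expected fraction of results outside [LSL, USL] under a normal model."""
    return normal_cdf((lsl - mean) / sd) + 1.0 - normal_cdf((usl - mean) / sd)


# Hypothetical example: spec 90-110, centered process with SD 2, method SD 1.5.
total_sd = observed_sd(process_sd=2.0, method_sd=1.5)  # 2.5
print(f"Cp ignoring method error: {cp(90, 110, 2.0):.2f}")
print(f"Cp as observed:           {cp(90, 110, total_sd):.2f}")
print(f"Out-of-spec fraction:     {out_of_spec_fraction(90, 110, 100, total_sd):.2e}")
```

Under these assumptions, any reduction in method SD moves the observed Cp back toward the capability of the process itself, which is one way to quantify the benefit of a more precise method.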

Identify the Purpose of the Method Experiment

The purpose of the analytical method experiment should be clear (e.g., repeatability, intermediate precision, linearity, resolution). The structure of the study, the sampling plan, and the ranges used all depend on that purpose. A study designed to determine accuracy is very different from one designed to explore and improve precision. Accuracy, for example, does not require sample replicates to estimate the mean change in the response. Precision, however, requires replicates and duplicates to evaluate variation in the sample preparation and in other aspects of the method. The purpose of the study should drive the study design.

Define the Range of Concentrations to be Evaluated

Define the range of concentrations the method will be used to measure and the solution matrix it will be measured in. The concentration range defines the characterized design space, so select it carefully: it restricts how the method may be used in the future (see Figure 2). ICH Q2(R1) recommends a minimum of five concentrations for establishing linearity.
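A minimal sketch of choosing evenly spaced concentration levels and checking linearity with an ordinary least-squares fit. The even spacing, the hypothetical 80 to 120 range, and the function names are assumptions for illustration; the text itself only specifies five concentrations.

```python
def concentration_levels(low: float, high: float, n: int = 5) -> list[float]:
    """Return n evenly spaced concentrations from low to high, inclusive."""
    if n < 2:
        raise ValueError("need at least two levels")
    step = (high - low) / (n - 1)
    return [low + i * step for i in range(n)]


def linear_fit(x: list[float], y: list[float]) -> tuple[float, float, float]:
    """Least-squares fit y = slope * x + intercept; returns (slope, intercept, r_squared)."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    syy = sum((yi - mean_y) ** 2 for yi in y)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    r_squared = sxy ** 2 / (sxx * syy)
    return slope, intercept, r_squared


# Hypothetical range: 80 to 120 (e.g., percent of a target concentration).
levels = concentration_levels(80.0, 120.0)
print(levels)  # [80.0, 90.0, 100.0, 110.0, 120.0]
```

Paired with the measured responses at each level, `linear_fit(levels, responses)` returns the slope, intercept, and r² used to judge linearity across the chosen range.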

Define all Reference Standards Used in the Study

Develop/define the reference standards for bias and accuracy studies. Without a well-characterized reference standard, the bias/accuracy of the method cannot be determined. Care should be taken in selecting, storing, and using reference materials. Stability of the reference is a key consideration, and accounting for degradation when replacing standards is critical.
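Once a reference value is assigned, bias and recovery follow directly from the measurements. A minimal sketch, assuming bias is reported as the mean measured value minus the reference value; the `potency` adjustment for a degraded standard is a hypothetical illustration of accounting for stability, not a prescribed correction.

```python
from statistics import mean


def bias_and_recovery(measured: list[float], nominal_value: float,
                      potency: float = 1.0) -> tuple[float, float]:
    """Return (bias, percent recovery) against a reference standard.

    `potency` is a hypothetical correction for reference degradation
    (e.g., 0.98 if the standard has lost 2% of its assigned value).
    """
    reference_value = nominal_value * potency
    average = mean(measured)
    bias = average - reference_value
    recovery_pct = 100.0 * average / reference_value
    return bias, recovery_pct
```

If the standard's assigned value is not corrected for degradation, the apparent bias absorbs the loss of the standard rather than reflecting the method.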

Identify all Steps in the Analytical Method

Lay out the flow or sequence used in the analytical method. Define the steps in the method (e.g., standard operating procedures [SOPs], procedures, or work instructions); all chemistries, reagents, plates, and materials used in the method; and all instruments, sensors, and equipment. Identify any steps in the process, materials, analyst techniques, or equipment that may influence bias or precision.

Determine the Responses

Determine the responses that are aligned to the purpose of the study. Raw data and statistical measures such as bias, intermediate precision, signal-to-noise ratio, and coefficient of variation (CV) are all responses and should be treated as independent results from the method. Set up the data table so that it collects the raw data, the statistics can be easily generated from that raw data, and each statistic links directly back to the data it came from.
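One way to keep statistics tied to the raw data is to compute them from the raw rows and store, alongside each statistic, the indices of the rows it came from. A minimal sketch, assuming each raw row is a dict with a grouping key (hypothetically `condition`) and a numeric `value`; a spreadsheet or LIMS would implement the same idea differently.

```python
from collections import defaultdict
from statistics import mean, stdev


def summarize(rows: list[dict], group_key: str = "condition") -> dict:
    """Compute n, mean, SD, and CV% per group, keeping the indices of the raw rows used."""
    groups: dict = defaultdict(list)
    for index, row in enumerate(rows):
        groups[row[group_key]].append(index)

    summary = {}
    for key, indices in groups.items():
        values = [rows[i]["value"] for i in indices]
        avg = mean(values)
        sd = stdev(values) if len(values) > 1 else float("nan")
        summary[key] = {
            "n": len(values),
            "mean": avg,
            "sd": sd,
            "cv_pct": 100.0 * sd / avg,  # undefined if the group mean is zero
            "source_rows": indices,  # direct link from each statistic back to the raw data
        }
    return summary
```

Because the summary is always regenerated from the raw rows, a corrected raw value automatically flows through to the mean, SD, and CV.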

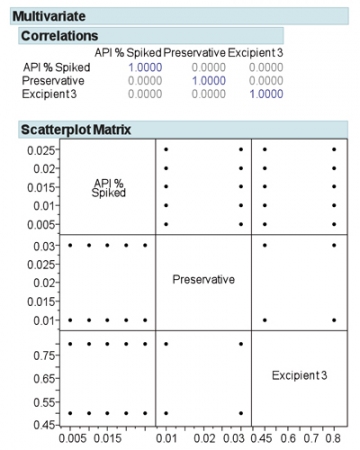

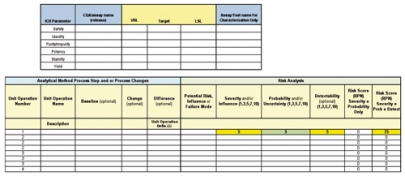

Complete a Risk Assessment of the Method

A risk assessment of the analytical method is used to identify areas/steps in the method that may influence precision, accuracy, linearity, selectivity, signal to noise, etc. Specifically, the risk question is: Where do we need characterization and development for this assay? Complete a risk assessment of all materials, equipment, analysts, and method components aligned to the purpose of the study and the key responses. The outcome of the risk assessment is a small set (3 to 8) of risk-ranked factors that may influence the reportable result of the assay. There are many kinds of factors, so identifying each factor and deciding how to treat it in the analysis are crucial to designing valid experiments. Controllable factors may be continuous, discrete numeric, categorical, or mixture factors. Uncontrollable factors may be covariates or uncontrolled factors. In addition, blocking factors and constants are used for error control (see Figure 3).
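The risk ranking and factor classification can be sketched as a small data model. This sketch assumes an FMEA-style risk priority number (severity × occurrence × detection, each scored 1 to 10), which the text does not prescribe; it also assumes that only controllable factors enter the experimental matrix, while blocking factors, constants, and covariates are handled in the error control plan. The factor names and scores in any usage are hypothetical.

```python
from dataclasses import dataclass
from enum import Enum


class FactorType(Enum):
    CONTINUOUS = "continuous"
    DISCRETE_NUMERIC = "discrete numeric"
    CATEGORICAL = "categorical"
    MIXTURE = "mixture"
    COVARIATE = "covariate"
    UNCONTROLLED = "uncontrolled"
    BLOCKING = "blocking"
    CONSTANT = "constant"


# Factors that can be set deliberately in the experimental matrix.
CONTROLLABLE = {FactorType.CONTINUOUS, FactorType.DISCRETE_NUMERIC,
                FactorType.CATEGORICAL, FactorType.MIXTURE}


@dataclass
class Factor:
    name: str
    kind: FactorType
    severity: int    # assumed 1-10 scale
    occurrence: int  # assumed 1-10 scale
    detection: int   # assumed 1-10 scale (10 = hardest to detect)

    @property
    def rpn(self) -> int:
        """FMEA-style risk priority number (an assumed scoring scheme)."""
        return self.severity * self.occurrence * self.detection


def select_study_factors(factors: list[Factor], max_factors: int = 8) -> list[Factor]:
    """Rank controllable factors by RPN and keep the top max_factors for the DOE."""
    candidates = [f for f in factors if f.kind in CONTROLLABLE]
    return sorted(candidates, key=lambda f: f.rpn, reverse=True)[:max_factors]
```

A high-risk blocking factor such as the analyst is not dropped by this filter; it simply moves to the error control plan instead of the factor list.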

Design the Experimental Matrix and Sampling Plan

For small studies with two or three factors, a full factorial design may be appropriate. When there are more than three factors, a D-optimal custom design should be used to explore the design space more efficiently and identify the factors that affect the method. Many statistical software packages help the user define statistically valid experiments and can be customized to meet the user's needs.
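For the small-study case, a full factorial in coded units is easy to generate directly. A minimal sketch, assuming two-level coding (-1, +1) with optional center points and randomized run order; the function names and factor ranges are hypothetical. A D-optimal design is not shown here, since it is usually built with an exchange algorithm in dedicated DOE software.

```python
import random
from itertools import product


def full_factorial(factors: list[str], levels=(-1, 1), center_points: int = 0) -> list[dict]:
    """All combinations of coded levels, plus optional center points (all factors at 0)."""
    runs = [dict(zip(factors, combo)) for combo in product(levels, repeat=len(factors))]
    runs += [{name: 0 for name in factors} for _ in range(center_points)]
    return runs


def decode(run: dict, ranges: dict) -> dict:
    """Map coded units (-1, 0, +1) to actual settings given (low, high) per factor."""
    actual = {}
    for name, coded in run.items():
        low, high = ranges[name]
        actual[name] = (low + high) / 2 + coded * (high - low) / 2
    return actual


def randomized(runs: list[dict], seed: int) -> list[dict]:
    """Return a shuffled copy so run order is not confounded with drift over time."""
    order = list(runs)
    random.Random(seed).shuffle(order)
    return order
```

Passing `levels=(-1, 0, 1)` gives a three-level full factorial, which grows as 3^k runs and shows why custom designs become attractive beyond three factors.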

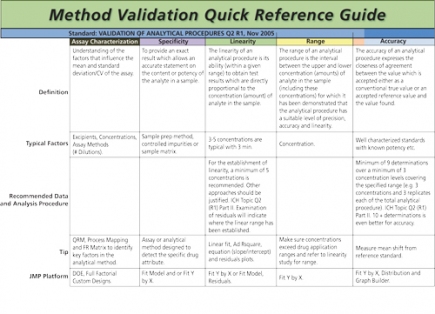

The experimental matrix is one consideration; the sampling plan is another. Replicates and duplicates are essential for quantifying each factor's influence on precision. Replicates are complete repeats of the method, including repeats of the sample preparation; duplicates are single sample preparations measured multiple times (e.g., multiple injections) using the final chemistry and instrumentation. Replicates capture total method variation, while duplicates capture instrument, plate, and chemistry precision independent of sample preparation errors. If the experiment is designed properly, many of the requirements for method validation (Figure 4) can be met directly from the outcomes of the method DOE.
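Replicates and duplicates map naturally onto a nested variance-components analysis. A minimal sketch, assuming a balanced design analyzed with a one-way random-effects model (method-of-moments estimates): the within-preparation variance comes from the duplicates, the preparation variance is what the replicates add on top, and their sum estimates total method variance. Unbalanced or multi-factor designs would need mixed-model software instead.

```python
def variance_components(preparations: list[list[float]]) -> dict:
    """One-way random-effects ANOVA for a balanced nested design.

    Each inner list holds the duplicate measurements (e.g., injections) of one
    independent sample preparation (replicate). All inner lists must be the same length.
    """
    k = len(preparations)
    n = len(preparations[0])
    if k < 2 or n < 2 or any(len(p) != n for p in preparations):
        raise ValueError("need >= 2 preparations with the same number (>= 2) of measurements")

    prep_means = [sum(p) / n for p in preparations]
    grand_mean = sum(prep_means) / k
    ms_between = n * sum((m - grand_mean) ** 2 for m in prep_means) / (k - 1)
    ms_within = sum((y - m) ** 2
                    for p, m in zip(preparations, prep_means) for y in p) / (k * (n - 1))

    var_measurement = ms_within  # duplicates: instrument/plate/chemistry precision
    var_preparation = max(0.0, (ms_between - ms_within) / n)  # truncated at zero
    return {
        "measurement": var_measurement,
        "preparation": var_preparation,
        "total": var_measurement + var_preparation,  # replicates: total method variation
    }
```

Comparing the two components shows where improvement effort pays off: a large preparation component points to sample preparation steps, a large measurement component to the instrument or chemistry.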